how to make research design in qualitative research

The case for iteration in qualitative research design

By Nhu Le, Heather Lanthorn, and Crystal Huang

Acknowledgment: This piece was written for quantitative researchers who have experience in rigorous quantitative techniques, but are interested in understanding rigorous qualitative approaches. Please add any questions you have in the comments and we will be happy to clarify and further discuss them.

IDinsight's mission is to use data and evidence to help leaders combat poverty worldwide.

In pursuit of this mission, much of our work uses quantitative methods, often to estimate a program's effectiveness through "decision-focused" evaluations. But a program's effectiveness is deeply intertwined with context and process. Carefully understanding these components is necessary for informed decision-making.

While some elements of context and process can (and should) be described quantitatively, qualitative tools can elaborate, deepen, and broaden this understanding and clarify the mechanisms involved. Rich, thick description can help us explain what is happening in one setting, and can also facilitate comparisons across settings. IDinsight has increasingly incorporated qualitative work in our engagements, and clients have found that these insights enrich their understanding of a program's processes and effectiveness or shortcomings, and help them make better-informed decisions and actions to combat poverty.

While there is growing interest among development researchers and practitioners in using 'mixed-methods' (both quantitative and qualitative methods, as described here, here, and here) and traditionally quantitative researchers are increasingly 'qual-curious' (such as here, here, or here), practical how-to advice on doing qualitative work, particularly for those more familiar with quant, remains relatively limited.

In this post we provide some nuts-and-bolts advice on doing qualitative, descriptive work for researchers who are more familiar with quantitative data collection procedures. We focus specifically on iteration: incorporating what you learn at one point in the research into the remainder of the research, instead of following rigid linear steps. Iteration is key to generating richer and more useful qualitative data.

We consider what iteration means, why it is useful, and how to do it effectively — and illustrate how to better plan for iteration by budgeting time for a) reflection and debrief, and b) flexibility for observation. We use the case study of our Investing in Women project to illustrate how one IDinsight team put this into practice.

A quantitative approach to collecting and analyzing data

If you have mostly worked on large-n, quantitative projects, you're familiar with a research process like this:

The various steps (theory construction, setting research instruments, and planning the sample) are fixed before data collection begins. This ensures rigor, which is achieved through reproducibility. Data collection proceeds linearly to data analysis, with collection completed before analysis begins (excepting quality checks) to reduce bias.

While field research always contains surprises, the process is generally predictable: you typically know the characteristics of the people you will be interviewing beforehand; your sampling frame is pre-determined, and you go about sampling respondents from it; and any individual respondent's responses are limited to the set of options provided in closed-ended questions (with the exception of a smattering of 'other, specify').

A qualitative approach to collecting and analyzing data

In qualitative work, rigor is pursued differently, because what counts as a "complete" dataset, or a complete analysis, differs when you are working with smaller samples and open-ended questions.

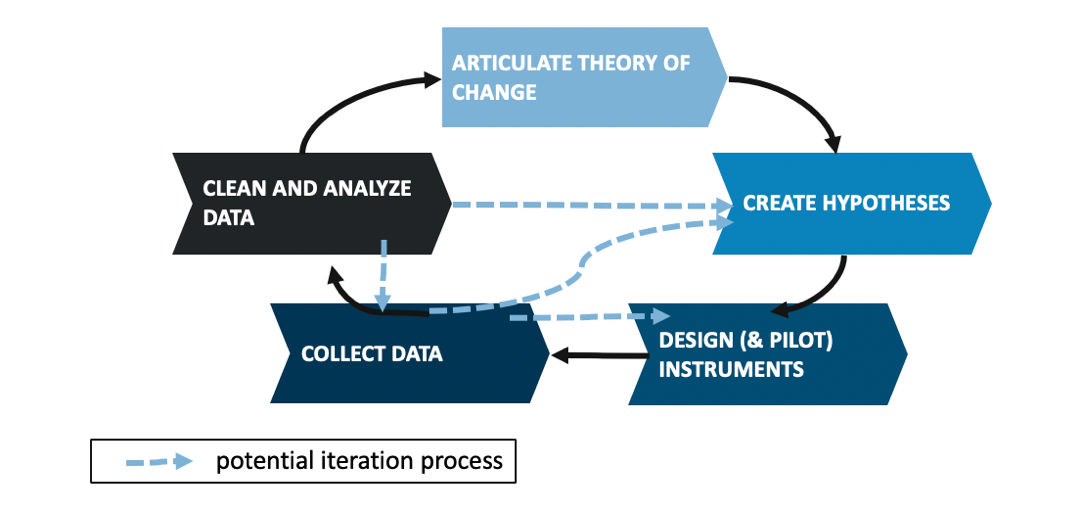

This means our linear, predictable process suddenly looks more like this (!):

The overlapping, looping process illustrated above is the result of thoughtful flexibility and iteration.

What is iteration and why iterate?

Iteration means incorporating what you learn at one point in the research process into the remainder of the research. During iteration, we make use of data captured in almost-real-time to update and improve on our theory, methods, and sample in pursuit of a more complete understanding of a context or phenomenon.

The unpredictable nature of qualitative data feeds the iterative process. Unexpected information that emerges during data collection can be used to adapt your methods and sample to better capture and explore further insights. For example, you can incorporate into the interview guide a new probe that worked particularly well at eliciting a rich response, or you can explore a new hypothesis generated by a response in future interviews or observations.

Look back at the 'spaghetti' diagram, above, of the qualitative research process. The light blue, dashed arrows show where iteration might happen. For example:

- Revising or dropping interview questions due to new information

- Adjusting or supplementing the respondent sample to include previously unknown individuals with relevant knowledge or unique experiences

- Going back to previous respondents to clarify responses

- Expanding interview questions and/or research questions to new areas of interest

- Adjusting hypotheses and theory, which may mean updating your codebook for further analysis (if your analysis method involves thematic coding)

Getting concrete: planning for iteration

Allowing for this adjustment and expansion requires building in time for the relevant people to review and reflect on data as it is coming in, to begin early analysis of emergent patterns, and to revise your approach accordingly. We have two key ideas for this:

1. Budget in time to reflect, debrief, and adjust

2. Budget in time for flexibility, to speak or observe more people and processes or to revisit those already studied

We elaborate and illustrate these points by drawing on an ongoing project with Investing in Women.

Investing in Women is a program in Southeast Asia funded by the Australian Department of Foreign Affairs and Trade (DFAT) that, as part of its broad program, aims to improve workplace gender equality by supporting business coalitions for women's empowerment in four countries: Indonesia, Myanmar, the Philippines, and Vietnam. We built case studies of several member firms of business coalitions using semi-structured interviews, in order to explore the state of workplace gender equality and the progress of gender equality-related activities at the firms. Our results will help Investing in Women more effectively support the business coalitions and their member firms to pursue increased gender equality and women's economic empowerment.

1. Budget in time to reflect, debrief, and adjust

Iteration requires time, ideally between interviews and observations, but at least between days of data collection, to reflect on what was collected.

This time allows you to get into the weeds (what details did we miss and should check?) and also to stand up and look at the forest (is what we are collecting both interesting and useful?).

First, get into the weeds. The data collector should review notes (and recording) from an interview, and "expand" their notes. This facilitates three practices: cleaning up your data, to ensure it makes sense in a week's time and to give yourself the means to interpret the data more deeply; identifying remaining questions, in order to prevent "we should have asked that!" face-palms after you have left the data collection site; and reflecting on what worked on a question-by-question basis, to guide small tweaks to a question's phrasing or delivery to build in what you have learned.

Then, get out of the weeds (individual questions and interviews) and look at the forest (your overall project and the decision goals). The data collection team as well as other relevant project members should regularly debrief. These other members might be the lead researcher (principal investigator) who designs and manages the project, other researchers, and even implementers of the intervention, if appropriate. Note that this means allowing time and resources for more senior-level research team members to be involved in this process, whether in directly collecting data or debriefing about it. It pays off for them to be familiar with what you're finding in your data, because they have the authority to make adjustments. Reflect on what was interesting and surprising, what was a dud, what patterns are emerging, whether the information was useful, and how it all relates to understanding a theory and the context.

Project Spotlight

Here's how we applied this tip to our work with Investing in Women:

- We scheduled 30-minute breaks between interviews to discuss the previous respondent, and to clean and expand field notes while everything was still fresh.

- We had end-of-day debriefs with the research team. This meant planning for a slightly shorter day of data collection.

- We scheduled respondents to front-load individuals that we thought would inform later data collection. We knew HR employees would have useful insights, and we were able to build these insights into the questions we later asked general employees.

- We scheduled informal follow-up interviews with the member firm's contact person wherever possible. This enabled us to rapidly answer questions that arose during field work.
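To see what this budgeting does to a field day, here is a rough back-of-the-envelope sketch in Python. Only the 30-minute break comes from our project; the 8-hour field day, 60-minute interviews, and 45-minute debrief are illustrative assumptions, and `interviews_per_day` is a hypothetical helper, not a tool we used.

```python
def interviews_per_day(field_minutes: int,
                       interview_minutes: int,
                       break_minutes: int,
                       debrief_minutes: int) -> int:
    """Number of interviews that fit in one field day.

    n interviews need n * interview_minutes plus (n - 1) breaks,
    and the end-of-day debrief is taken out of the day first.
    """
    available = field_minutes - debrief_minutes
    fit = (available + break_minutes) // (interview_minutes + break_minutes)
    return max(0, fit)


# Assumed numbers: 8-hour day, 60-minute interviews, 45-minute debrief.
# The 30-minute break between interviews is the one figure from the project.
with_reflection = interviews_per_day(480, 60, 30, 45)
back_to_back = interviews_per_day(480, 60, 0, 0)

print(f"With breaks and debrief: {with_reflection} interviews per day")
print(f"Back to back, no debrief: {back_to_back} interviews per day")
```

Under these assumed numbers, reflection time takes the day from eight interviews to five. That trade-off is worth making visible when you draw up the field budget, rather than discovering it on site.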

2. Budget in time for flexibility, to speak or observe more people and processes, or to revisit those already studied

Good qualitative work aims for sample appropriateness and adequacy, rather than for a specific sample size. Appropriateness reflects having respondents "who best represent or have knowledge of the research topic." Adequacy relates to how well we understand a phenomenon, and is partially determined by the characteristics of the respondents (which we may partially know through the hypotheses about heterogeneous experiences we developed prior to data collection), and the information they give (which will only reveal itself over the course of data collection).

In an ideal world, we would be able to spend lots of time in the field, iterating on collection and analysis until we felt we had a complete picture. One way we would assess this completeness would be by reaching the point of 'saturation' — that is, hearing the same things over and over and not gaining a lot of new information from each additional respondent. Unfortunately, most research projects do not have the timeline or budget for us to spend as much time as we would like to ensure we achieve sample adequacy.
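For readers who like to see an idea as a calculation, here is one minimal way to keep an eye on saturation, assuming your analysis uses thematic coding. After each interview you record the set of codes it touched and count how many are new. The code names, the three-interview window, and both function names are hypothetical choices for illustration, and a count like this is only a prompt for the team's judgment, not a substitute for it.

```python
from typing import List, Optional, Set


def new_codes_per_interview(coded_interviews: List[Set[str]]) -> List[int]:
    """Count how many previously unseen codes each interview contributes."""
    seen: Set[str] = set()
    counts: List[int] = []
    for codes in coded_interviews:
        fresh = codes - seen          # codes not heard in any earlier interview
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_point(counts: List[int],
                     window: int = 3,
                     max_new: int = 0) -> Optional[int]:
    """Return the 1-based interview number that completes the first run of
    `window` consecutive interviews each adding at most `max_new` new codes,
    or None if no such run exists yet."""
    for end in range(window, len(counts) + 1):
        if all(c <= max_new for c in counts[end - window:end]):
            return end
    return None


# Hypothetical codes from six interviews, in the order they were conducted.
interviews = [
    {"flexible_hours", "promotion_gap"},
    {"promotion_gap", "parental_leave"},
    {"flexible_hours", "mentoring"},
    {"parental_leave", "promotion_gap"},
    {"mentoring"},
    {"flexible_hours", "parental_leave"},
]

counts = new_codes_per_interview(interviews)
print(counts)                    # [2, 1, 1, 0, 0, 0]
print(saturation_point(counts))  # 6
```

In this made-up example the last three interviews add nothing new, so the sketch flags interview six. With a short field visit you may never reach that point, which is exactly the constraint described above.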

Project Spotlight

This was the case with the Investing in Women project; we had a limited amount of time to spend in each firm, so we had to be creative about how to include known 'key informants,' those known to have information on the topics of our inquiry, and still provide ourselves with the flexibility to capture additional 'information-rich' cases. To do so, we used a combination of purposive sampling and snowball sampling.

Most of our respondents were selected purposively, that is, non-randomly, based on characteristics that are known to be relevant to the study. For instance, we knew we wanted to include HR employees because they were likely to have direct visibility into the company culture around gender equality and related firm activities.

However, we also kept open a few "snowball" interview slots. "Snowball sampling" is one purposive strategy, which allows you to learn about, and then seek out, well-informed respondents along the way. It is particularly useful for situations in which there is limited information to guide sampling before field work. For example, in one firm, we learned that one department head was instrumental to ensuring the success of a particular gender initiative. We selected her as a snowball participant. In another firm, initial interviews suggested that the firm's employees either worked regular office hours or in shifts, and that the nature of work affected their perceptions of workplace gender equality. We used our snowball slots to select employees with both types of work arrangements as interviewees.
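The logic of holding back snowball slots can be sketched as a simple plan with two pools. This is an illustration only: the `SamplePlan` class, the slot counts, and the respondent labels are all hypothetical, and in practice we tracked this in a schedule rather than in code.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SamplePlan:
    """Interview slots for one firm, with some held back for snowball picks."""
    total_slots: int
    snowball_slots: int
    purposive: List[str] = field(default_factory=list)
    snowball: List[str] = field(default_factory=list)

    def add_purposive(self, respondent: str) -> bool:
        """Fill a slot chosen before field work; fails once those are used up."""
        if len(self.purposive) < self.total_slots - self.snowball_slots:
            self.purposive.append(respondent)
            return True
        return False

    def add_snowball(self, respondent: str) -> bool:
        """Fill a reserved slot with someone identified during field work."""
        already_in = respondent in self.purposive or respondent in self.snowball
        if not already_in and len(self.snowball) < self.snowball_slots:
            self.snowball.append(respondent)
            return True
        return False


# Assumed plan: six interview slots at a firm, two reserved for snowballing.
plan = SamplePlan(total_slots=6, snowball_slots=2)
for person in ["HR manager", "HR officer", "employee A", "employee B"]:
    plan.add_purposive(person)

# Respondents we only learned about from earlier interviews.
plan.add_snowball("department head (gender initiative)")
plan.add_snowball("shift worker")
accepted = plan.add_snowball("night-shift supervisor")  # no slots left

print(plan.purposive)
print(plan.snowball)
print(accepted)  # False
```

The point of the sketch is the reservation: if every slot is committed before field work, there is nowhere to put the department head you only hear about on day one.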

A full investigation of qualitative sampling strategies is beyond the scope of this post. Suffice it to say, when you are working with a relatively small n, you need to be thoughtful about how to compose your sample.


Source: https://medium.com/idinsight-blog/the-case-for-iteration-in-qualitative-research-design-e07ed1314756